Nvidia’s streaming software now has an option to make it appear like you’re making eye contact with the camera, even if you’re looking somewhere else in real life. Using AI, the “Eye Contact” feature added to Nvidia Broadcast 1.4 will replace your eyes with “simulated” ones that are aligned with your camera — an effect that worked really well when we tested it ourselves, except for all the times it didn’t.

In an announcement post, the company writes that the feature is meant for “content creators seeking to record themselves while reading their notes or a script” without having to look directly at a camera. Pitching it as something you’d use during a public performance, instead of something you’d use socially, does kind of sidestep the dilemmas that come with this sort of tech. Is it rude to use AI to trick my mom into thinking I’m engaged in our video call when I’m actually looking at my phone? Or to make my boss think I’m not writing an article on my other monitor during a meeting? (I’m going to say yes, given that getting caught in either scenario would land me in hot water.)

Nvidia says that Eye Contact will try to make your simulated eyes match the color of your real ones, and there’s “even a disconnect feature in case you look too far away.”

Here’s a side-by-side demo with an unedited stream, so you can compare how my eyes actually move with how Nvidia’s software renders them:

Looking at the results I got, I’m not a huge fan of Eye Contact; I think it makes things look just a little off. Part of that is the animated eye movement. While it’s very cool that it’s even possible, it sometimes makes my eyes look like they’re darting around at superhuman speeds. There are also some odd, very distracting pop-ins that you can see near the end of the video.

There were definitely a few times when the feature got it right, and when it did, it was very impressive. Still, the misses were too frequent and noticeable for me to use this the next time I show up to a meeting (though, in theory, I could).

Nvidia does label the feature as a beta and is soliciting feedback from community members to help it improve. “There are millions of eye colors and lighting combinations,” the company says. “If you test it and find any issues, or just want to help us develop this AI effect further, please send us a quick video here, we would really appreciate it!”

Nvidia has been leaning heavily into this sort of AI generation in recent years. A major selling point of its graphics cards is DLSS, a feature that renders games at a lower (but easier to run) resolution and then uses machine learning to upscale the image, filling in detail that isn’t actually there at the lower resolution. The latest version, DLSS 3, goes further and generates entirely new frames to insert into your gameplay, much as Broadcast generates a new pair of eyes and adds them to your face.

Broadcast also has other AI-powered features, such as background replacement that works as a virtual green screen and the ability to clean up background noises that your microphone picks up.

This isn’t the first eye contact feature we’ve seen. Apple started testing a similar feature called “Attention Correction” for FaceTime in 2019. In current versions of iOS, it’s labeled “Eye Contact” in Settings > FaceTime. Microsoft also offers a version of the feature in Windows 11 for devices with a neural processing unit.

Eye Contact isn’t the only feature Nvidia added to Broadcast 1.4. The update also brings a vignette effect that Nvidia says is similar to Instagram’s, and it improves the Blur, Replacement, and Removal virtual background effects. The update is available to download now for anyone with an RTX graphics card.

Gif: Jay Peters / The Verge

/cdn.vox-cdn.com/uploads/chorus_asset/file/24348505/docs_npc_post.gif) Gif: Google

Gif: Google

/cdn.vox-cdn.com/uploads/chorus_asset/file/24347792/Screenshot_2023_01_09_at_12.48.16.png)

/cdn.vox-cdn.com/uploads/chorus_asset/file/24322143/Lenovo_Thinkphone_by_Motorola__6_.png) Image: Motorola

Image: Motorola

/cdn.vox-cdn.com/uploads/chorus_asset/file/24342190/2022_Bronco_BasicPack_Carbon_Black_Pdp_Hero_e1672860007676.png) Image: Lenovo

Image: Lenovo

/cdn.vox-cdn.com/uploads/chorus_asset/file/24342215/saibatso.jpg) Screenshot by Sean Hollister / The Verge

Screenshot by Sean Hollister / The Verge

/cdn.vox-cdn.com/uploads/chorus_asset/file/24342230/71340220_2708653779193367_6734135478880043008_n.jpg) Image:

Image: /cdn.vox-cdn.com/uploads/chorus_asset/file/24342073/gameblaster.jpg) Image via

Image via /cdn.vox-cdn.com/uploads/chorus_asset/file/24342150/creative_labs_sound_blaster_audigy_2_zs_platinum_pro_972606.jpg)

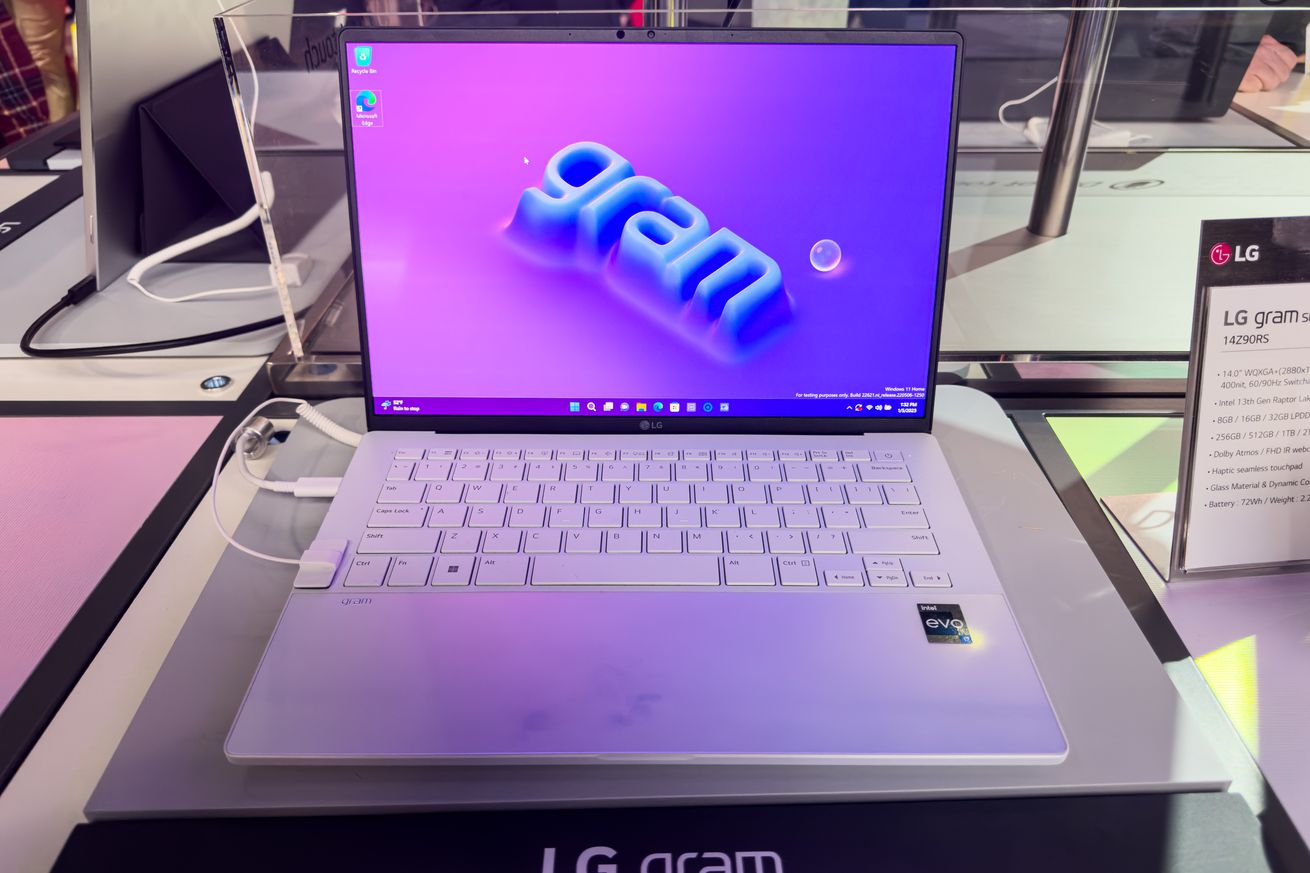

/cdn.vox-cdn.com/uploads/chorus_asset/file/24340727/226474_LG_Gram_Style_MChin_0004.jpg)

/cdn.vox-cdn.com/uploads/chorus_asset/file/24340726/226474_LG_Gram_Style_MChin_0003.jpg)

/cdn.vox-cdn.com/uploads/chorus_asset/file/24340728/226474_LG_Gram_Style_MChin_0005.jpg)

/cdn.vox-cdn.com/uploads/chorus_asset/file/24340729/226474_LG_Gram_Style_MChin_0006.jpg)